Advantages of Using robots.txt in Magento

You may also wonder why such robots are needed at all. Search engines send small programs called spiders (or crawlers) to visit your site and collect information, so that your pages can be indexed in the search results. A robots.txt file is the standard way to tell these crawlers which parts of your site they should not crawl. Besides search engines, robots are also used for specific automated tasks, such as HTML and link validation. In a Magento store, the file's goal is to keep crawlers away from the site's JavaScript and from URLs carrying SID parameters, and to prevent duplicate content. This helps improve your Magento SEO and reduces the load on your server. Finally, specifying a Crawl-delay lets you reduce the footprint that web robots leave on your bandwidth allocation, at least for crawlers that honor this directive. So there are enough reasons to use robots.txt in Magento, but it is crucial to do it the right way.

Things You Should Know Before You Start

Before you decide to install a robots.txt file, you should know that its rules apply to one host at a time. If you have any subdomains (e.g. shop.example.com), each of them needs its own robots.txt. When you run multiple online stores, it likewise makes sense to create a separate file for each of them. On the whole, implementing robots.txt is very simple: it is nothing but a plain text file, so anyone can create it quickly in their preferred editor, whether Dreamweaver, Notepad, vim or another code editor. A range of different robots exists; Googlebot and Bingbot, for instance, are two well-known crawlers. What really matters is where the file lives: it must reside at the root of the domain. If your store domain is, for example, www.e-store.com, place robots.txt in the domain root, the directory that also contains Magento's app directory, so that it is accessible as www.e-store.com/robots.txt. Saving the file in any other directory or subdirectory is useless, because crawlers only look for it at the root. Two more considerations matter when using robots.txt on a Magento site:

- This file is publicly available, so anyone can see which parts of your server you want to keep away from crawlers

- Robots may ignore the file, especially malware bots that scan the web for security vulnerabilities
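Because the rules are tied to a single host, the robots.txt URL a crawler consults can be derived from any page address by keeping only the scheme and host and replacing the path with /robots.txt. The Python sketch below illustrates this with the standard library's urllib.parse; the function name robots_url and the sample URLs are hypothetical, chosen only for illustration.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_url(page_url: str) -> str:
    """Return the robots.txt URL that governs page_url (hypothetical helper).

    Only the scheme and network location (host and any port) are kept; the
    path, query and fragment are replaced, because crawlers look for
    robots.txt at the root of the host.
    """
    parts = urlsplit(page_url)
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


if __name__ == "__main__":
    # A page deep inside the store still maps to the file at the domain root.
    print(robots_url("http://www.e-store.com/women/dresses.html"))
    # A subdomain is a different host, so it needs its own robots.txt.
    print(robots_url("https://shop.example.com/checkout/cart/"))
```

Since www.e-store.com and shop.e-store.com yield different robots.txt URLs, a file placed on one host has no effect on the other.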

Installation Process and Tips

There are several ways to install a Magento robots.txt file. First, let us look at how to do it manually. This file has been available on the web since 2010: simply copy the content below and paste it into a newly created file named robots.txt. Change the Sitemap location before uploading the file to the site's root (even if the Magento installation is in a subdirectory). This version of robots.txt is offered by byte.nl as an optimal one.

```text
# robots.txt
#
# This file is to prevent the crawling and indexing of certain parts
# of your site by web crawlers and spiders run by sites like Yahoo!
# and Google. By telling these "robots" where not to go on your site,
# you save bandwidth and server resources.
#
# This file will be ignored unless it is at the root of your host:
# Used:    http://example.com/robots.txt
# Ignored: http://example.com/site/robots.txt
#
# For more information about the robots.txt standard, see:
# http://www.robotstxt.org/wc/robots.html
#
# For syntax checking, see:
# http://www.sxw.org.uk/computing/robots/check.html
#
# Prevent blocking URL parameters with robots.txt
# Use Google Webmaster Tools > Crawl > Url parameters instead

# Website Sitemap
Sitemap: http://www.example.com/sitemap.xml

# Crawlers Setup
User-agent: *
# Directories
Disallow: /404/
Disallow: /app/
Disallow: /cgi-bin/
Disallow: /downloader/
Disallow: /admin/
Disallow: /errors/
Disallow: /includes/
#Disallow: /js/
Disallow: /lib/
Disallow: /magento/
#Disallow: /media/
Disallow: /media/captcha/
#Disallow: /media/catalog/
#Disallow: /media/css/
#Disallow: /media/css_secure/
Disallow: /media/customer/
Disallow: /media/dhl/
Disallow: /media/downloadable/
Disallow: /media/import/
#Disallow: /media/js/
Disallow: /media/pdf/
Disallow: /media/sales/
Disallow: /media/tmp/
Disallow: /media/wysiwyg/
Disallow: /media/xmlconnect/
Disallow: /pkginfo/
Disallow: /report/
Disallow: /scripts/
Disallow: /shell/
#Disallow: /skin/
Disallow: /stats/
Disallow: /var/
# Paths (clean URLs)
Disallow: /index.php/
Disallow: /catalog/product_compare/
Disallow: /catalog/category/view/
Disallow: /catalog/product/view/
Disallow: /catalog/product/gallery/
Disallow: /catalogsearch/
Disallow: /checkout/
Disallow: /control/
Disallow: /contacts/
Disallow: /customer/
Disallow: /customize/
Disallow: /newsletter/
Disallow: /poll/
Disallow: /review/
Disallow: /sendfriend/
Disallow: /tag/
Disallow: /wishlist/
# Files
Disallow: /api.php
Disallow: /cron.php
Disallow: /cron.sh
Disallow: /error_log
Disallow: /install.php
Disallow: /LICENSE.html
Disallow: /LICENSE.txt
Disallow: /LICENSE_AFL.txt
Disallow: /STATUS.txt
Disallow: /get.php # Magento 1.5+
Disallow: /README.txt
Disallow: /RELEASE_NOTES.txt
# Do not index the general technical directories and files on a server
Disallow: /cgi-bin/
Disallow: /cleanup.php
Disallow: /apc.php
Disallow: /memcache.php
Disallow: /phpinfo.php
# Paths (no clean URLs)
#Disallow: /*.js$
#Disallow: /*.css$
Disallow: /*.php$
Disallow: /*?SID=
Disallow: /rss*
Disallow: /*PHPSESSID
```
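Before uploading, you can roughly check how a crawler would interpret the directory rules. The sketch below feeds an excerpt of the file above to Python's standard urllib.robotparser; the excerpt, the build_parser helper and the test URLs are illustrative assumptions. Note that urllib.robotparser only does plain prefix matching and does not understand the * and $ wildcards used in rules such as Disallow: /*.php$ (Google does support them), so those lines are left out and this is only an approximation. site_maps() requires Python 3.8 or later.

```python
from urllib.robotparser import RobotFileParser

# Excerpt of the Magento robots.txt above (illustrative). Wildcard rules such as
# "Disallow: /*.php$" are omitted because urllib.robotparser only does prefix matching.
# No blank lines inside the group: this parser treats a blank line as the end of a group.
ROBOTS_EXCERPT = """\
Sitemap: http://www.example.com/sitemap.xml
User-agent: *
# Directories
Disallow: /app/
Disallow: /media/customer/
Disallow: /var/
# Paths (clean URLs)
Disallow: /catalogsearch/
Disallow: /checkout/
Disallow: /customer/
"""


def build_parser(robots_text: str) -> RobotFileParser:
    """Parse robots.txt text locally, without fetching anything over the network."""
    parser = RobotFileParser()
    parser.parse(robots_text.splitlines())
    return parser


if __name__ == "__main__":
    rp = build_parser(ROBOTS_EXCERPT)
    for url in (
        "http://www.example.com/women/dresses.html",
        "http://www.example.com/checkout/cart/",
        "http://www.example.com/media/catalog/product/shirt.jpg",
        "http://www.example.com/media/customer/avatar.jpg",
    ):
        verdict = "allowed" if rp.can_fetch("Googlebot", url) else "blocked"
        print(verdict, url)
    print("Sitemaps:", rp.site_maps())
```

Product images under /media/catalog/ stay crawlable because the corresponding Disallow line is commented out in the full file, while /media/customer/ is blocked.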

Another way to install robots.txt for Magento is to follow these simple guidelines:

- Download the robots.txt file first (there are a lot of sources available).

- If Magento is installed in a subdirectory, you will have to modify the robots.txt file accordingly. For instance, this means changing ‘Disallow: /404/’ to ‘Disallow: /your-sub-directory/404/’ and ‘Disallow: /app/’ to ‘Disallow: /your-sub-directory/app/’.

- Check whether your domain has a sitemap.xml and, if it does, add its URL to the Sitemap line of robots.txt.

- Now upload the robots.txt file to your root folder: just place the file in the ‘httpdocs/’ directory. You can do this in two ways: by logging in to your hosting control panel with your credentials, or via an FTP client of your choice.
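The subdirectory adjustment from the second step can be automated. The Python sketch below uses a hypothetical helper, prefix_rules (not part of Magento or of any tool mentioned here), that prefixes every Allow/Disallow path with the subdirectory name and copies comments, commented-out rules, User-agent and Sitemap lines unchanged. It assumes Magento lives in a single subdirectory such as /your-sub-directory/; wildcard rules like /*.php$ are prefixed too, which narrows them to that subdirectory.

```python
def prefix_rules(robots_text: str, subdir: str) -> str:
    """Hypothetical helper: prefix Allow/Disallow paths with a Magento subdirectory.

    Comments (including commented-out rules such as "#Disallow: /js/"),
    User-agent and Sitemap lines are copied unchanged, as are rules whose
    value is empty or does not start with "/".
    """
    prefix = "/" + subdir.strip("/")
    result = []
    for line in robots_text.splitlines():
        field, sep, value = line.partition(":")
        path = value.strip()
        if sep and field.strip().lower() in ("allow", "disallow") and path.startswith("/"):
            # A trailing comment such as "# Magento 1.5+" stays attached to the new path.
            line = f"{field.strip()}: {prefix}{path}"
        result.append(line)
    return "\n".join(result)


if __name__ == "__main__":
    original = "User-agent: *\nDisallow: /404/\nDisallow: /app/\n#Disallow: /js/"
    print(prefix_rules(original, "your-sub-directory"))
```

Remember that the resulting file itself still goes in the domain root, not in the subdirectory.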

Useful tools to check your robots.txt

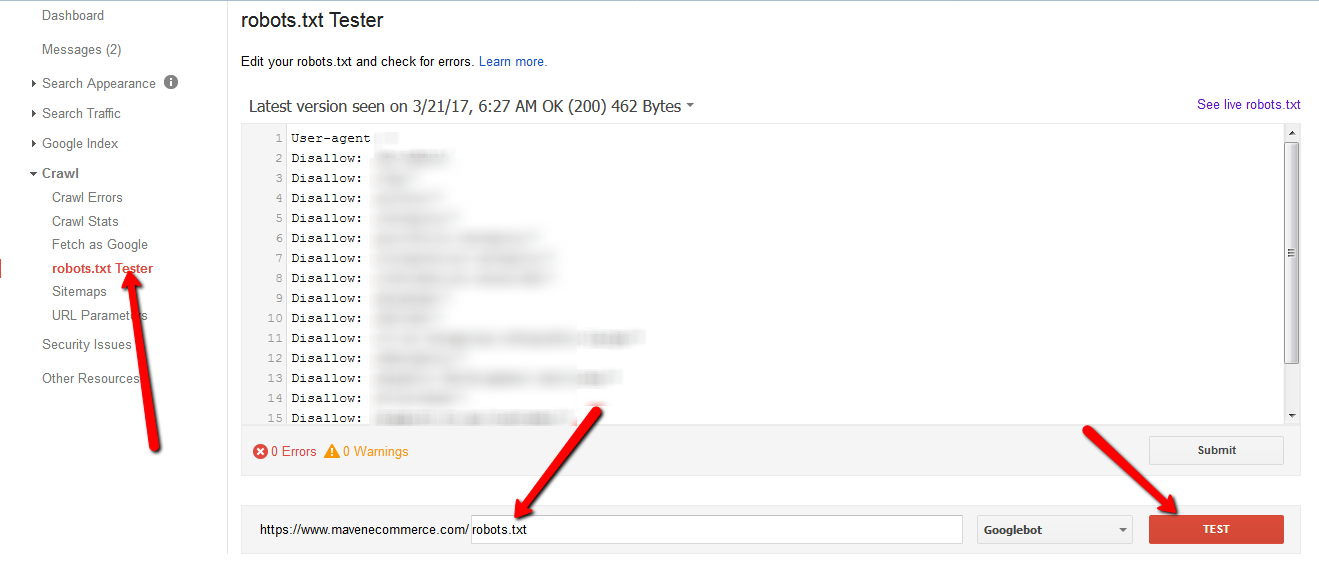

To make sure that you set up your robots.txt correctly, try one of the tools listed below. They help you analyze the file and fix any mistakes. The easiest and most reliable way to do so is Google Webmaster Tools, which lets you test your robots.txt for free from its web interface. To do so, you should:

- Go to Google Webmasters

- Click on «Search Console»

- Type your website’s name

- Click on «Crawl» in the Dashboard panel

- Choose «Robots.txt Tester» in the drop-down menu

- Type «robots.txt» in the field after the slash

- Hit the «Test» button

- Review the results

If you would rather not use Google Webmaster Tools, or simply want to try other options, these tools can also help you check your settings:

- http://tools.seobook.com/robots-txt/analyzer

- http://www.sxw.org.uk/computing/robots/check.html

- https://webmaster.yandex.com/tools/robotstxt
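If you just want a quick offline check before using one of these services, a few common mistakes can be caught with a small script. The Python sketch below is a hypothetical, deliberately simple linter, not a replacement for the tools above: it flags unknown directives (for example a misspelled Disalow), Allow/Disallow paths that do not start with / or *, and paths containing a space, such as Disallow: /cgi-bin /. Its list of known fields is an assumption, so nonstandard directives supported by individual search engines would be reported as unknown.

```python
from typing import List

# Directives this sketch recognises; an assumption, not an exhaustive list.
KNOWN_FIELDS = {"user-agent", "allow", "disallow", "sitemap", "crawl-delay"}


def lint_robots(robots_text: str) -> List[str]:
    """Return warnings for suspicious lines in a robots.txt file (hypothetical helper)."""
    warnings = []
    for number, raw in enumerate(robots_text.splitlines(), start=1):
        line = raw.split("#", 1)[0].strip()  # drop comments and surrounding whitespace
        if not line:
            continue
        field, sep, value = line.partition(":")
        field, value = field.strip().lower(), value.strip()
        if not sep or field not in KNOWN_FIELDS:
            warnings.append(f"line {number}: unknown or malformed directive: {raw!r}")
        elif field in ("allow", "disallow") and value and not value.startswith(("/", "*")):
            warnings.append(f"line {number}: path should start with '/': {raw!r}")
        elif field in ("allow", "disallow") and " " in value:
            warnings.append(f"line {number}: path contains a space: {raw!r}")
    return warnings


if __name__ == "__main__":
    sample = "User-agent: *\nDisallow: /cgi-bin /\nDisalow: /app/\nDisallow: /var/"
    for warning in lint_robots(sample):
        print(warning)
```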

For Magento Backend

This approach involves installing a robots.txt extension. Instead of doing the whole job by hand, you can download special tools that generate robots.txt for Magento. Their settings let you change the main options, and, conveniently, you can add your own rules in addition to the standard settings.

Reindexing robots.txt

Search engines can take a long time to pick up a changed Magento robots.txt. Tools such as Google Webmaster Tools (GWT) can show when your site was last indexed. If you want Google or other search engines to fetch the updated version sooner than after 24 hours or a hundred visits, you can set a Cache-Control header in your .htaccess file. Add this statement to your .htaccess file:

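```apache
# Let crawlers and proxies cache .txt files (including robots.txt) for 60 seconds only.
# Note: this pattern matches every .txt file under this directory, not just robots.txt.
<FilesMatch "\.txt$">
  <IfModule mod_headers.c>
    Header set Cache-Control "max-age=60, public, must-revalidate"
  </IfModule>
</FilesMatch>
```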
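After editing .htaccess, you can check that the header is actually sent. The Python sketch below (both function names are hypothetical) sends a HEAD request for /robots.txt with urllib.request and extracts the max-age value from the Cache-Control header; with the directive above it should report 60. It assumes the server answers HEAD requests and that mod_headers is enabled; if the header is missing, the function returns None, and a server that rejects HEAD will raise an HTTPError.

```python
from typing import Optional
from urllib.request import Request, urlopen


def max_age_seconds(cache_control: str) -> Optional[int]:
    """Return the max-age value (in seconds) from a Cache-Control header, or None."""
    for directive in cache_control.split(","):
        name, _, value = directive.strip().partition("=")
        if name.strip().lower() == "max-age" and value.strip().isdecimal():
            return int(value)
    return None


def robots_cache_lifetime(site: str) -> Optional[int]:
    """Send a HEAD request for <site>/robots.txt and report its max-age (hypothetical helper)."""
    request = Request(site.rstrip("/") + "/robots.txt", method="HEAD")
    with urlopen(request, timeout=10) as response:
        return max_age_seconds(response.headers.get("Cache-Control", ""))


if __name__ == "__main__":
    # Offline demonstration using the header value from the .htaccess snippet.
    print(max_age_seconds("max-age=60, public, must-revalidate"))
    # Against a live store (hypothetical URL; needs network access):
    # print(robots_cache_lifetime("http://www.e-store.com"))
```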